Google vs OpenAI

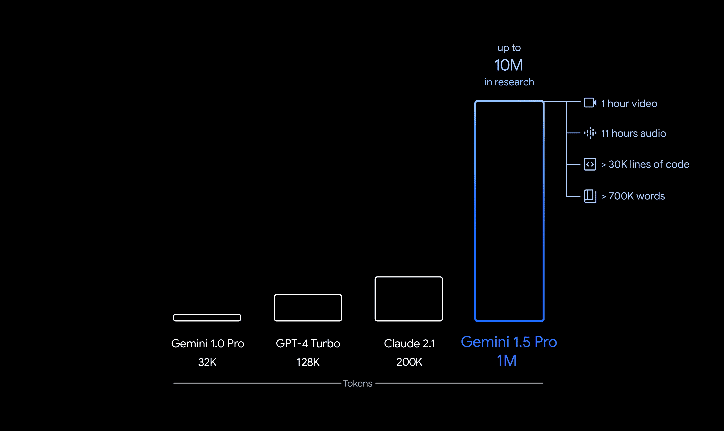

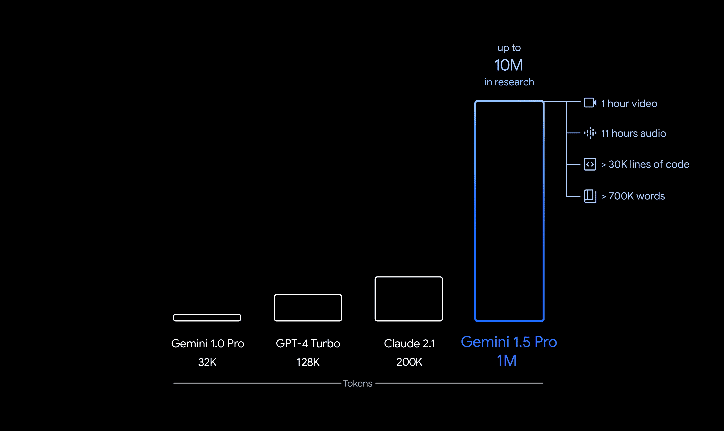

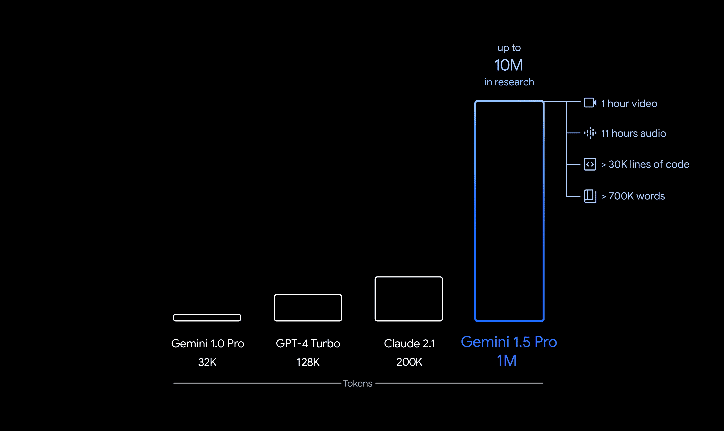

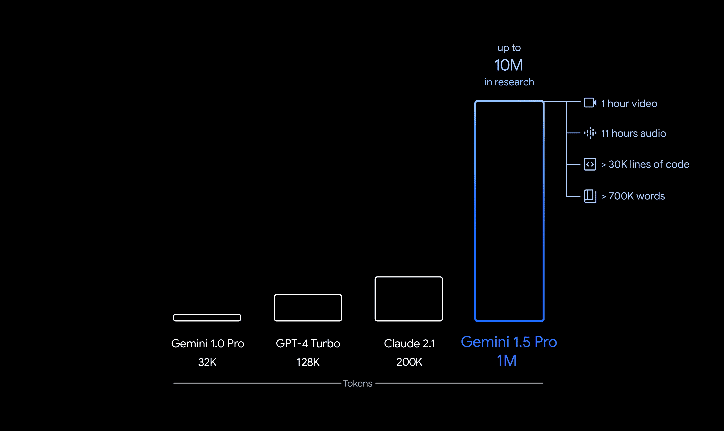

The battle is getting intense: Google's Gemini 1.5 has crushed ChatGPT (and Claude) with the largest context window yet, 1 million tokens.

Gemini 1.5 can take in far more context than ChatGPT, but Sora stole its thunder

This is a groundbreaking development (refer to the image below).

But OpenAI stole Google's thunder with Sora.

Prompt: A litter of golden retriever puppies playing in the snow. Their heads pop out of the snow, covered in.

Output:

How did it all start?

One of the first widely publicized AI models capable of generating video from text was "Make-A-Video", developed by Meta AI (formerly Facebook AI) and announced in September 2022. Make-A-Video creates short video clips from text descriptions, a significant step forward for generative AI and multimedia content creation.

What's happening now?

This is where things get interesting. If you look closely at the videos generated by Sora (see the video below at 3:34), the lady's nose looks a bit odd. We have seen similar flaws in AI image generators, and yet thousands of them are available now.

If you're wondering whether AI can produce movies, it can't just yet. What it could disrupt, though, are two key areas:

Storyboarding: Imagine I needed a video showcasing a startup founder's journey and how he found his eureka moment. Instead of reviewing an artist's sketches, I could review generated concepts of individual scenes before deciding which one to shoot. This could save hundreds of hours for VFX teams and videographers.

Later Effects (paying homage to the legendary Adobe After Effects): When you're working on an ad campaign video and, at the last minute, an executive suggests a minor change to one of the scenes, Sora could potentially handle that change and save the video team time.

If you already work with text-to-video AI tools, reach out to me. I'd like to hear how they're changing your work every day.